ISP: Multi-Layered Garment Draping with Implicit Sewing Patterns

Ren Li, Benoit Guillard, Pascal Fua

Abstract

We introduce a learned parametric garment representation model that can handle multi-layered clothing. As in models used by clothing designers, each garment consists of individual 2D panels. Their 2D shape is defined by a Signed Distance Function, and 3D shape by a 2D-to-3D mapping. We show that this combination is faster and yields higher quality reconstructions than purely implicit surface representations. It makes the recovery of layered garments from images possible thanks to its differentiability, and the 2D parameterization enables easy detection of potential collisions. Furthermore, it supports rapid editing of garment shapes and texture by modifying individual 2D panels.

Example

An open vest is reconstructed with either (a) an implicit surface representation (MeshUDF, [MU]), or (b) our method.

Using (a), the shape has wavy and irregular borders, and the procedure is slow. In contrast, our method (b) quickly obtains a more regular mesh.

Approach

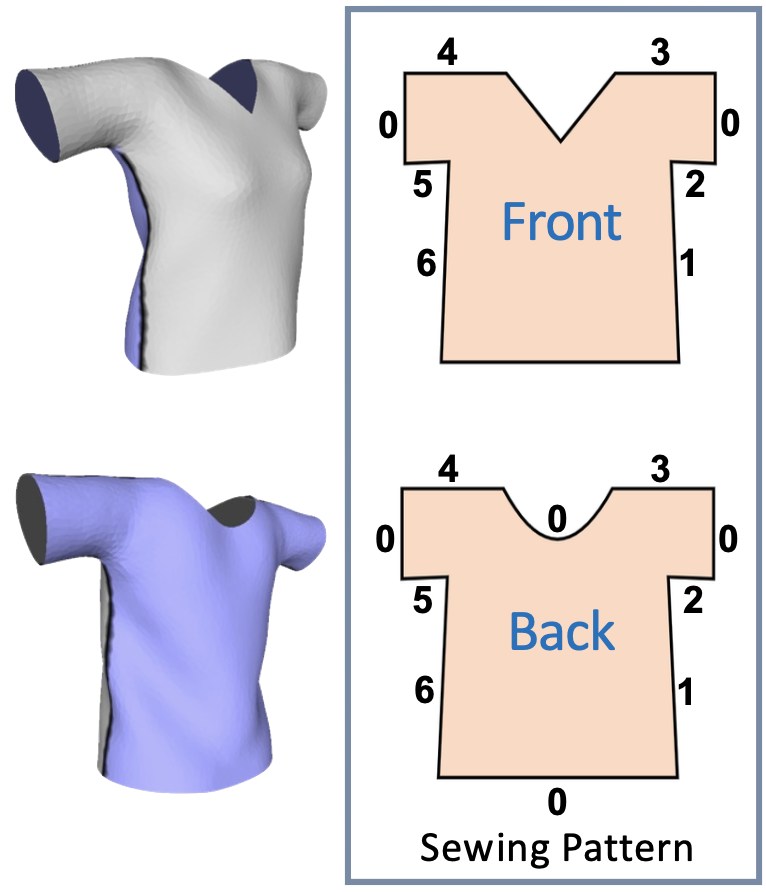

We represent 3D digital garments as flat sewing patterns that are deformed and stitched together. For example, this shirt is naturally decomposed into a front and a back panel, shown in grey and purple. When flattened, each panel has a distinct shape, and the numbers indicate which edges align and need to be stitched together.
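To make the two ingredients concrete, here is a minimal sketch of a single panel. It is an illustration only, not the released implementation: `panel_sdf`, `uv_to_3d`, and `sample_panel` are hypothetical names, the rectangle SDF stands in for the learned 2D signed distance function, and the cylinder wrap stands in for the learned 2D-to-3D mapping.

```python
import math


def panel_sdf(u, v, half_w=0.4, half_h=0.6):
    """Signed distance to an axis-aligned rectangle centred at the origin.

    Negative inside the panel, positive outside. In the real model this
    would be a learned function; the rectangle is an assumption.
    """
    dx = abs(u) - half_w
    dy = abs(v) - half_h
    outside = math.hypot(max(dx, 0.0), max(dy, 0.0))
    inside = min(max(dx, dy), 0.0)
    return outside + inside


def uv_to_3d(u, v, radius=0.3):
    """Toy 2D-to-3D mapping: wrap the flat panel around a cylinder."""
    theta = u / radius
    return (radius * math.sin(theta), v, radius * math.cos(theta))


def sample_panel(resolution=21):
    """Sample a UV grid on [-1, 1]^2 and lift the points inside the panel to 3D."""
    points = []
    for i in range(resolution):
        for j in range(resolution):
            u = -1.0 + 2.0 * i / (resolution - 1)
            v = -1.0 + 2.0 * j / (resolution - 1)
            if panel_sdf(u, v) <= 0.0:  # keep only UV samples inside the panel
                points.append(uv_to_3d(u, v))
    return points


points_3d = sample_panel()
```

The sign of the 2D distance decides which UV samples belong to the panel, and the mapping decides where those samples end up in 3D, so the panel outline and the draped shape can be changed independently.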

Draping

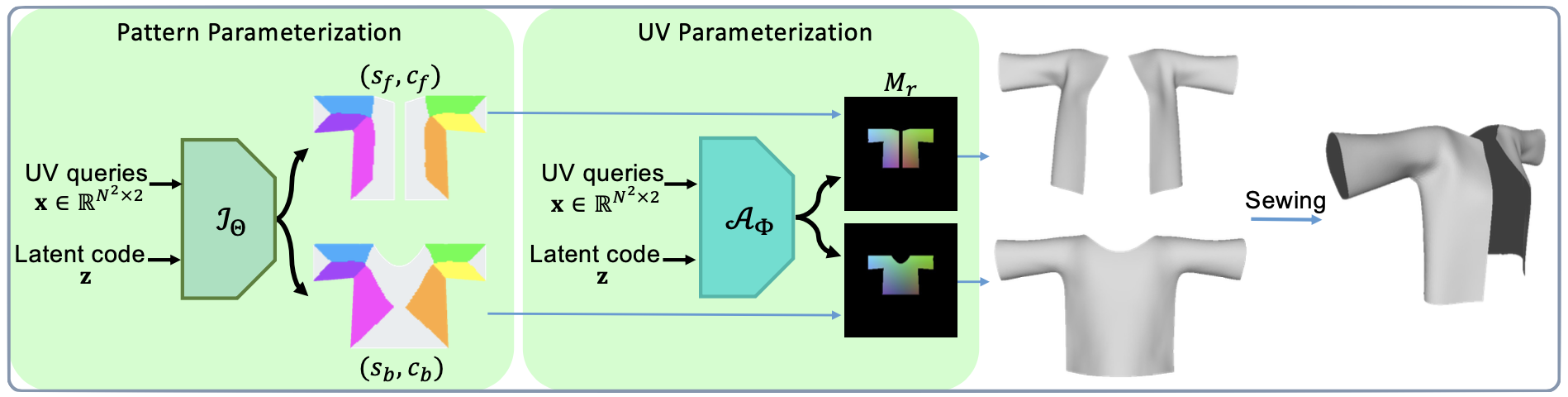

In order to fit garments onto different body poses and shapes, we adopt techniques from both DrapeNet and SNUG. We train a deformation network using a self-supervised approach. What sets our method apart is a new parameterization that allows us to extend the draping process across multiple layers. We base our reasoning on the UV-maps of each panel to easily detect intersections. When found, these intersections can be corrected using another neural network, specifically a 2D CNN. This results in an iterative process where garments are draped sequentially according to their natural order.
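The sequential, UV-based collision handling can be sketched as follows. This is a simplified illustration under strong assumptions: each layer is reduced to a small grid storing one distance-to-body value per UV pixel, all layers share the same grid, and a hand-written clamp replaces the learned 2D CNN correction. The names `find_intersections` and `drape_in_order` are hypothetical.

```python
def find_intersections(inner, outer):
    """Return the (row, col) UV pixels where the outer layer dips below the inner one."""
    return [
        (r, c)
        for r, row in enumerate(outer)
        for c, height in enumerate(row)
        if height < inner[r][c]
    ]


def drape_in_order(layers, margin=0.01):
    """Process layers innermost first, lifting colliding pixels above the layer beneath.

    The clamp is a stand-in for the learned correction network (an assumption).
    """
    resolved = [[row[:] for row in layers[0]]]
    for layer in layers[1:]:
        below = resolved[-1]
        fixed = [row[:] for row in layer]
        for r, c in find_intersections(below, fixed):
            fixed[r][c] = below[r][c] + margin
        resolved.append(fixed)
    return resolved


# Toy per-pixel distances to the body for two layers on a 2x2 UV grid.
shirt = [[0.02, 0.02], [0.03, 0.02]]
jacket = [[0.05, 0.01], [0.02, 0.04]]

print(find_intersections(shirt, jacket))  # [(0, 1), (1, 0)]
resolved_shirt, resolved_jacket = drape_in_order([shirt, jacket])
```

Because both layers are indexed by the same UV pixels, detecting a collision reduces to a per-pixel comparison, which is what makes the check cheap compared with testing 3D meshes against each other.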

Garment Modifications

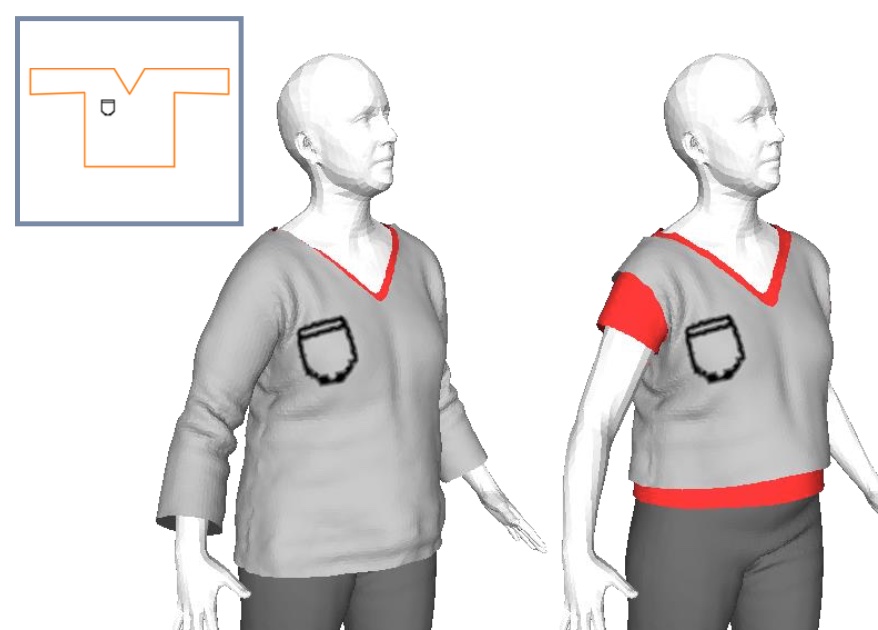

By working within the UV space of our garment parameterization, we can easily make modifications. For instance, sketching on a single pattern results in a textured 3D garment. This texture can also be transferred to additional garments by simply changing the latent codes. Textured patterns can also be used to create new garment visuals.

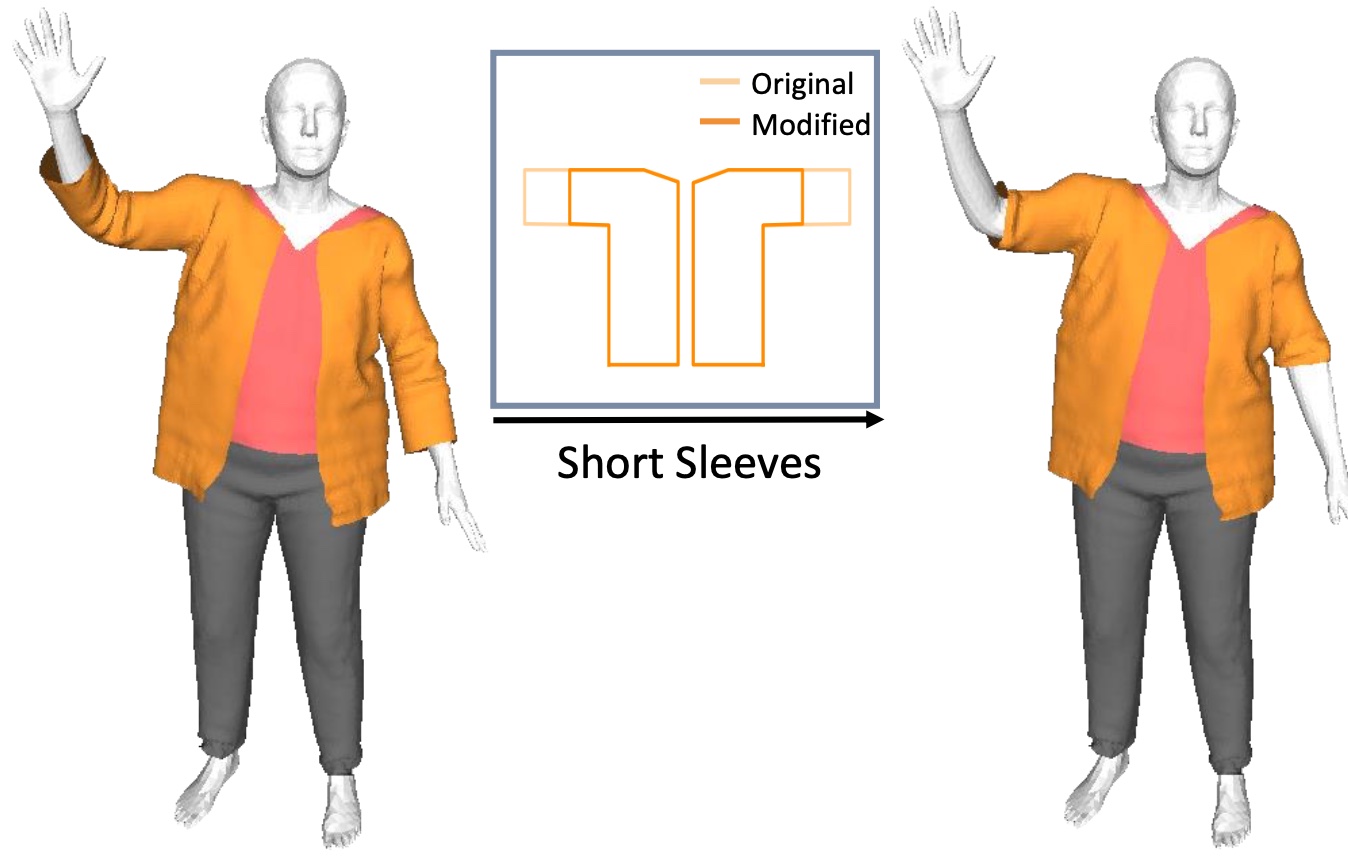

We can also easily manipulate the garment 3D shape by editing the panels. Our sewing pattern representation makes it easy to specify new edges by drawing and erasing lines in the 2D panel images. The panel shape is then fit to the edited edges by minimizing a 2D Chamfer distance.
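As a rough illustration of the 2D Chamfer objective, the sketch below fits a single edge to a drawn line. It makes several assumptions: the Chamfer distance uses the common symmetric, mean squared nearest-neighbour form, the panel edge is controlled by one hypothetical parameter (`height`), and a grid search replaces the gradient-based optimization one would use with a differentiable model.

```python
import math


def chamfer_2d(points_a, points_b):
    """Symmetric 2D Chamfer distance: mean squared nearest-neighbour distance, both ways."""
    def one_sided(src, dst):
        return sum(min(math.dist(p, q) ** 2 for q in dst) for p in src) / len(src)

    return one_sided(points_a, points_b) + one_sided(points_b, points_a)


def edge_points(height, n=11):
    """Hypothetical panel edge: n points on a horizontal line at the given height."""
    return [(i / (n - 1), height) for i in range(n)]


# Target edge, as if drawn by the user in the 2D panel image.
target = edge_points(0.25)

# Pick the edge height whose points best match the drawn line.
candidates = [k / 20 for k in range(21)]
best_height = min(candidates, key=lambda h: chamfer_2d(edge_points(h), target))
print(best_height)  # 0.25
```

The distance is zero only when every point of each set has an exact match in the other, so minimizing it pulls the panel edge onto the drawn line without requiring point-to-point correspondences.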

BibTeX

If you find our work useful, please cite it:

@article{li2023isp,
  author = {Li, Ren and Guillard, Benoit and Fua, Pascal},
  title = {ISP: Multi-Layered Garment Draping with Implicit Sewing Patterns},
  journal = {Advances in Neural Information Processing Systems},
  year = {2023}
}

References and related work

[MU] MeshUDF: Fast and Differentiable Meshing of Unsigned Distance Field Networks, Guillard et al., ECCV 2022.

[AN] AtlasNet: A Papier-Mâché Approach to Learning 3D Surface Generation, Groueix et al., CVPR 2018.

[NSM] Structure-Preserving 3D Garment Modeling with Neural Sewing Machines, Chen et al., NeurIPS 2022.